Expectations vs. Reality: Language Models don't act like humans, despite our expectations

Published 07-23-2024

As artificial intelligence continues to advance, language models like GPT-4 have become increasingly prevalent in our daily lives. From virtual assistants to customer support chatbots, these models are designed to interact with us in a seemingly human-like manner. However, a significant gap exists between our expectations and the reality of how these language models function. This article explores why people might expect language models to behave like humans, provides examples of scenarios where these expectations lead to misunderstandings or disappointments, and highlights the algorithmic nature and limitations of these AI systems.

Why People Expect Language Models to Behave Like Humans

One of the primary reasons people expect language models to behave like humans is the portrayal of AI in media and pop culture. Movies, TV shows, and books often depict AI and language models with human-like qualities, creating a skewed perception of their capabilities. For instance, the movie "Her" features an AI operating system that engages in deep, emotional conversations with its user, fostering an illusion that AI can possess human-like understanding and empathy. Similarly, TV shows like "Westworld" and "Black Mirror" explore scenarios where AI entities exhibit human behaviors and emotions, further embedding the idea that AI can be indistinguishable from humans. These portrayals, while entertaining, are far from the current reality of language models. The gap between fiction and reality often leads to unrealistic expectations about what AI can do, resulting in disappointment when these expectations are not met.

Secondly, tech companies also play a role in shaping public perception by marketing language models as highly capable, human-like assistants. Brands like Apple, Google, and Amazon promote their virtual assistants (Siri, Google Assistant, and Alexa, respectively) with the promise of seamless interaction and intuitive responses. The branding of these products often emphasizes their ability to understand and respond to complex queries, creating an image of AI as reliable, human-like companions. This marketing approach, while effective in driving adoption, can be misleading. It sets high expectations that these language models may not always meet, particularly in situations requiring deep contextual understanding or emotional intelligence.

Lastly, anthropomorphism (or the attribution of human traits to non-human entities) is a natural human tendency that significantly influences our interactions with AI. Humans have a psychological inclination to see patterns and assign human characteristics to objects and animals, and this extends to technology as well. When interacting with language models, people often unconsciously project human-like attributes onto them, expecting them to understand and respond as a human would. This psychological comfort in interacting with AI that appears human-like can enhance user experience, but it also creates unrealistic expectations. When language models fail to meet these expectations, it can lead to frustration and a sense of betrayal.

Scenarios Where Expectations Lead to Misunderstandings or Disappointments

One of the most common applications of language models is in customer service and support. Companies deploy chatbots to handle customer queries, aiming to provide quick and efficient assistance. However, when language models fail to understand complex issues or provide contextually relevant responses, it can lead to significant frustration for users. For instance, a customer seeking support for a technical issue might encounter a chatbot that repeatedly provides irrelevant solutions or misunderstands the problem entirely. This lack of human empathy and problem-solving ability often results in a poor customer experience, highlighting the limitations of language models in handling nuanced and emotionally charged interactions.

Personal assistants such as Siri, Google Assistant, and Alexa are likewise marketed as helpful companions capable of managing daily tasks and answering a wide range of questions. However, users frequently experience disappointment when these assistants fail to provide accurate or contextually appropriate responses. For example, asking a virtual assistant for a recommendation on a personal issue or seeking advice on a sensitive topic often yields generic or irrelevant answers. These assistants lack the ability to truly understand the emotional weight of a user's query, leading to a gap between expectation and reality.

Additionally, language models are increasingly being used for content creation and writing, from generating blog posts to composing emails. While these models can produce coherent text, they often lack the depth, creativity, and originality that human writers bring to their work. A writer using an AI to draft an article might find the generated content to be repetitive, lacking in objective argumentation, or devoid of the personal touch that engages readers. This limitation underscores the reality that, despite advancements, language models are not yet capable of replicating the full spectrum of human creativity and critical thinking.

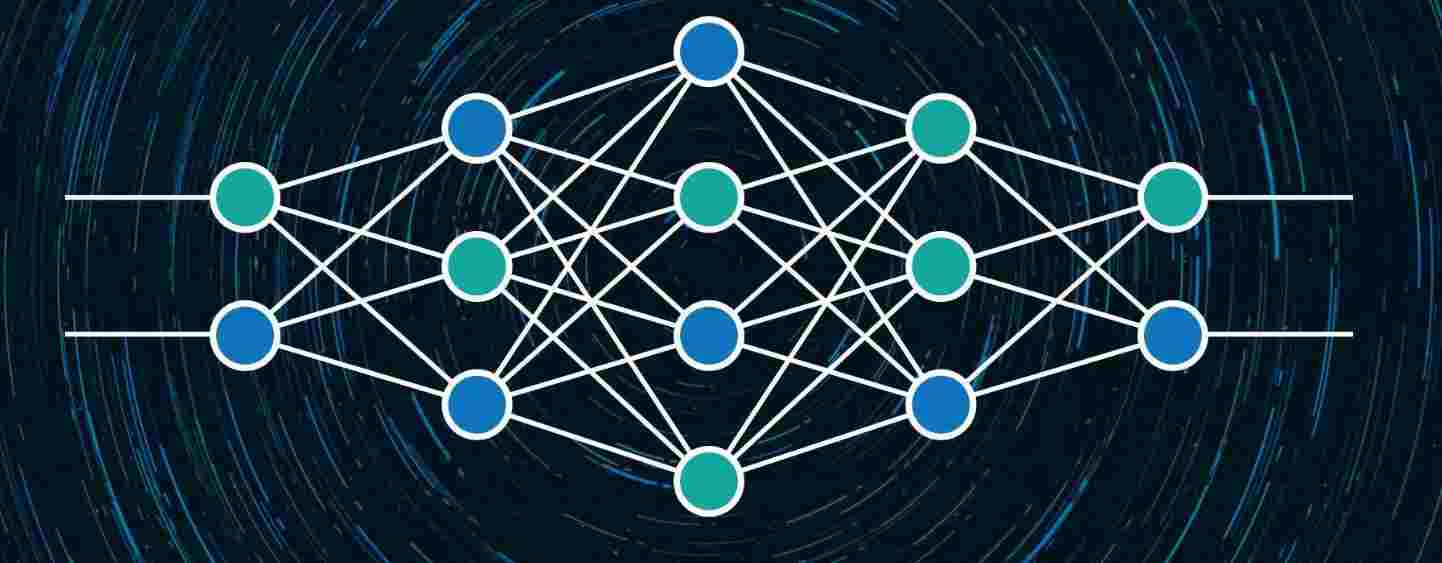

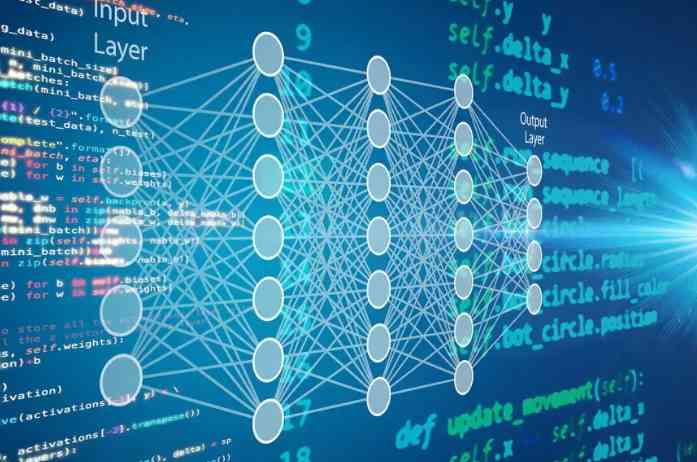

The Algorithmic Nature of Language Models

To understand why language models fall short of human-like behavior, it's essential to grasp the basics of how they work. Language models like GPT-3 and GPT-4 are based on machine learning algorithms that analyze vast amounts of text data to identify patterns and generate responses. These models do not understand language in the way humans do; instead, they rely on statistical associations between words and phrases. When a language model generates a response, it predicts the next word in a sequence based on the probability distributions learned during training. This process, while powerful, is fundamentally different from human cognition, which involves understanding context, emotions, and intent.
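To make the idea of predicting the next word from learned probabilities concrete, here is a minimal sketch in Python. It is a toy bigram counter, not how GPT-3 or GPT-4 actually work: those models use large neural networks over sub-word tokens. The corpus and the function names (`train_bigram_model`, `next_word_distribution`, `predict_next`) are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word_distribution(counts, word):
    """Convert the raw follow-up counts for `word` into probabilities."""
    followers = counts.get(word.lower(), Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # the word never appeared with a successor in training
    return {w: c / total for w, c in followers.items()}


def predict_next(counts, word):
    """Pick the most probable next word, or None if the word is unknown."""
    dist = next_word_distribution(counts, word)
    return max(dist, key=dist.get) if dist else None


# Hypothetical toy corpus; real models train on vastly more text.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram_model(corpus)

print(next_word_distribution(model, "the"))
print(predict_next(model, "the"))
```

The sketch chooses "cat" after "the" only because that pairing is the most frequent in the toy corpus. Nothing in the counts represents what a cat is, which is the sense in which such predictions rest on statistical association.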

One of the critical limitations of language models is their struggle to understand context and nuance. Human communication is rich with implicit meanings, idioms, cultural references, and emotional subtext, all of which are challenging for AI to grasp fully. For example, consider the idiom "kick the bucket." A human understands this to mean "to die," but a language model might interpret it literally without additional context. Similarly, cultural references that are clear to a human might be lost on an AI, leading to responses that miss the mark or seem out of place.

Ethical Considerations and Misuse

As language models become more integrated into various applications, there is a growing risk of over-reliance on AI for tasks that require human judgment and empathy. This reliance can lead to ethical concerns, particularly when AI is used in sensitive areas such as healthcare, legal advice, or mental health support. For instance, using AI for mental health support can be problematic if users expect the language model to provide empathetic and insightful advice. The lack of genuine understanding and emotional intelligence in AI can result in inappropriate or harmful responses, underscoring the need for human oversight in these critical areas.

The anthropomorphism and marketing strategies discussed earlier can mislead users into believing that language models possess human-like understanding. This misconception can have serious consequences, particularly when users turn to AI for important decisions or support. For example, a user seeking legal advice from an AI chatbot might assume the information provided is as reliable and contextual as that from a human lawyer. However, the AI's responses are based on patterns in the data it was trained on, not a deep understanding of legal principles or the specificities of the user's situation. This can lead to misguided decisions and potential harm.

The Importance of Human Oversight

Given the limitations of language models, it is crucial to balance AI and human interaction in applications where context and empathy are essential. Human oversight can help ensure that AI is used effectively while mitigating the risks of over-reliance and misunderstanding. In customer service, for instance, combining AI chatbots with human agents can provide the best of both worlds. The chatbot can handle straightforward queries, while human agents step in for complex or emotionally charged issues, ensuring that users receive appropriate and empathetic support.
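The chatbot-plus-human arrangement described above could be sketched roughly as follows. This is only an illustration under stated assumptions: the keyword list, the `bot_confidence` score, the 0.7 threshold, and the function name `route_query` are all hypothetical, and a production system would need a far more careful way to detect complex or emotionally charged messages.

```python
import re

# Hypothetical trigger words suggesting a complex or emotionally charged issue.
ESCALATION_KEYWORDS = {"refund", "complaint", "angry", "lawyer", "cancel"}


def route_query(message, bot_confidence, threshold=0.7):
    """Return "human" or "bot" for an incoming customer message.

    `bot_confidence` is assumed to be a score between 0 and 1 supplied by
    the chatbot for its own proposed answer.
    """
    words = set(re.findall(r"[a-z']+", message.lower()))
    if words & ESCALATION_KEYWORDS:
        return "human"  # sensitive topic: hand off regardless of confidence
    if bot_confidence < threshold:
        return "human"  # the bot is unsure, so a person should take over
    return "bot"  # straightforward query the bot can handle


print(route_query("What are your opening hours?", 0.95))
print(route_query("I want a refund now!", 0.95))
print(route_query("My router blinks in a strange pattern", 0.3))
```

Erring toward the human agent whenever either signal fires reflects the point of the paragraph: automation handles the routine cases, and people handle the ones that need judgment and empathy.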

Educating users about the capabilities and limitations of language models is essential for fostering realistic expectations. Users should understand that while AI can be a powerful tool, it is not a substitute for human judgment and expertise. Tech companies and developers can play a role in this education by providing clear information about what their AI products can and cannot do. Transparency in AI development and deployment can help users make informed decisions about when and how to use AI effectively.

In summary, language models have made significant strides in recent years, becoming valuable tools in various applications. However, the expectation that these models behave like humans often leads to misunderstandings and disappointments. By understanding the algorithmic nature and limitations of language models, we can set more realistic expectations and use AI more effectively. Recognizing the differences between language models and human behavior is crucial for leveraging AI's strengths while acknowledging its weaknesses. As AI continues to evolve, it is essential to maintain a balance between AI and human interaction, ensuring that we harness the benefits of technology without losing sight of the unique qualities that make us human.

Further Readings

- "How Spike Jonze’s “Her” is the perfect explainer ..." - A Medium article by Andrew DeVigal that explores the fascinating current status of AI as well as the possible paths it can take us.

- "Westworld" (2016) - A TV series depicting AI with human-like characteristics.

- "Black Mirror" (2011) - A TV series examining the dark side of technology and AI.

- "Understanding The Limitations Of AI (Artificial Intelligence)" - A Medium article by Mark Levis discussing the constraints of current AI technology.

- "Ethical concerns mount as AI takes bigger decision-making role in more industries" - An op-ed exploring the ethical implications of AI deployment in various fields.

- "AI anthropomorphism and its effect on users' self-congruence and self–AI integration: A theoretical framework and research agenda" - A study on how human tendencies to anthropomorphize influence our interaction with technology.